Problem & Motivation

The PhysioNet Open Access Database (PND) is among the most extensive and widely used repositories of biosignal data worldwide, with 181 datasets from studies spanning the last four decades. These datasets are crucial for training and validating artificial intelligence/machine learning (AI/ML) algorithms designed to estimate and predict health-related outcomes. However, the lack of uniform demographic reporting across these studies introduces a significant risk of bias, particularly affecting underrepresented and underserved populations. Heterogeneous reporting results in data that may not accurately represent all demographic groups, thereby skewing AI predictions toward overrepresented populations and exacerbating existing health disparities when these algorithms are deployed. Additionally, algorithms trained on non-representative data do not reliably generalize across real-world populations, which limits their use in healthcare settings.

Our study examines demographic reporting patterns within the PhysioNet Open Access Database to identify existing gaps and their implications. Specifically, we seek to identify relationships between demographic data that are more frequently reported, such as study participant age and sex, and demographic data that are frequently absent from biosignal studies, such as race and ethnicity. Additionally, even studies that do include race and/or ethnicity data do not report these data in a standardized manner, resulting in non-uniform reporting across the database. These analyses not only shed light on the prevalence of non-standardized demographic reporting within biosignal datasets, but also set the stage for evaluating the impact of such reporting on the accuracy and bias of AI/ML models in healthcare.

Approach

The PND contains 175 studies involving human subjects, as of July 2023. We conducted a systematic analysis of these 175 biosignal datasets/databases to identify reporting patterns, primarily for four key demographic variables (race, ethnicity, sex, and age), along with supplementary information such as study size (N), date of publication, location, and biosignal type. Where participant-level data were available, we used them to calculate our values. When information was available from multiple sources (i.e., a combination of the original publication, the dataset, and the PhysioNet description), the PhysioNet description of the study was used.

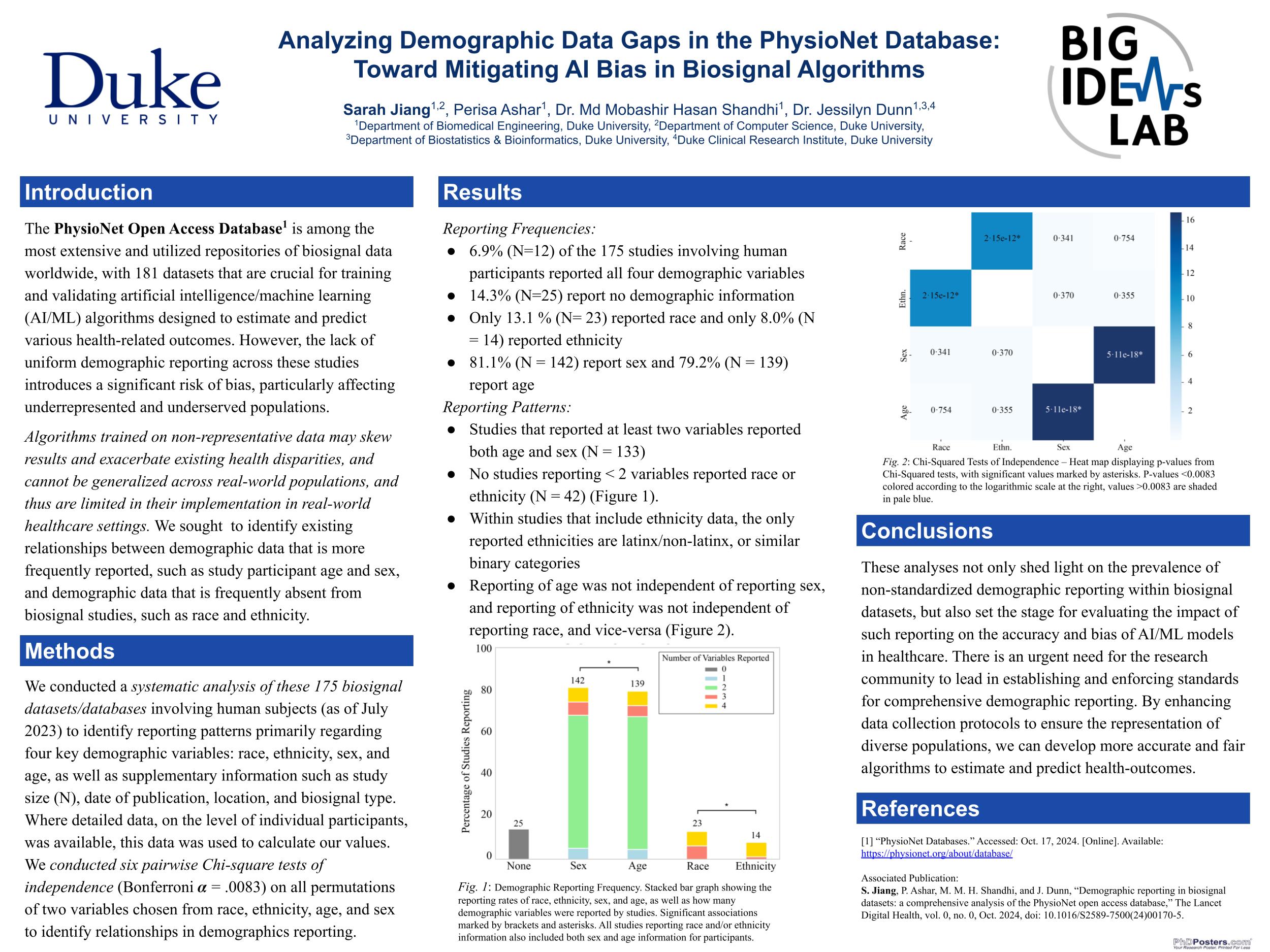

Additionally, we conducted six pairwise chi-square tests of independence, one for each combination of two variables chosen from race, ethnicity, age, and sex, to identify relationships in demographic reporting. The significance level was set to 0.05, with a Bonferroni-corrected alpha of 0.0083 (0.05/6) for the six pairwise comparisons. Linear regression models were used to test for statistically significant relationships between the number of demographic variables reported and both the date of publication and the date the data were posted on PhysioNet; however, due to substantial variation in demographic reporting between 2005 and 2020, these models did not identify a significant relationship. Figures were generated using Python 3.9.11, NumPy 1.25.1, and pandas 2.0.3, and the linear regression models were fit with scikit-learn 1.4.2.
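
The pairwise testing procedure can be illustrated with a minimal sketch, under stated assumptions. It assumes each study is encoded as a reported/not-reported flag per variable, builds a 2x2 contingency table for each of the six variable pairs, and compares each p-value to the Bonferroni-corrected alpha. The `flags` values are hypothetical toy data, not the PhysioNet data, and the helper names `chi_square_2x2` and `pairwise_tests` are introduced here for illustration only. The sketch uses the uncorrected Pearson statistic in plain Python; whether the original analysis applied a continuity correction is not stated.

```python
import math
from itertools import combinations

VARIABLES = ("race", "ethnicity", "age", "sex")
ALPHA = 0.05


def chi_square_2x2(x, y):
    """Pearson chi-square test of independence for two boolean sequences.

    Returns (statistic, p_value) with 1 degree of freedom and
    no continuity correction.
    """
    n = len(x)
    # Observed 2x2 table: rows = x reported / not, columns = y reported / not.
    observed = [[0, 0], [0, 0]]
    for xi, yi in zip(x, y):
        observed[0 if xi else 1][0 if yi else 1] += 1
    row = [sum(r) for r in observed]
    col = [observed[0][j] + observed[1][j] for j in range(2)]
    if 0 in row or 0 in col:
        raise ValueError("a variable with no variation cannot be tested")
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row[i] * col[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, the chi-square survival function
    # equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


def pairwise_tests(flags, alpha=ALPHA):
    """Test every unordered pair of variables against a Bonferroni alpha."""
    pairs = list(combinations(VARIABLES, 2))  # 4 choose 2 = 6 pairs
    corrected_alpha = alpha / len(pairs)      # Bonferroni correction
    results = {}
    for a, b in pairs:
        stat, p = chi_square_2x2(flags[a], flags[b])
        results[(a, b)] = (stat, p, p < corrected_alpha)
    return corrected_alpha, results


# Hypothetical toy flags for 12 studies (1 = variable reported).
flags = {
    "race":      [1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    "ethnicity": [1, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    "age":       [1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0],
    "sex":       [1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0],
}

corrected_alpha, results = pairwise_tests(flags)
print(f"Bonferroni-corrected alpha: {corrected_alpha:.4f}")
for (a, b), (stat, p, significant) in results.items():
    print(f"{a} vs {b}: chi2={stat:.2f}, p={p:.4f}, significant={significant}")
```

With only 12 toy studies the expected cell counts are too small for the chi-square approximation to be trustworthy, so the printed values serve only to show the mechanics. Note also that some library routines, such as SciPy's `chi2_contingency`, apply Yates' continuity correction to 2x2 tables by default and would therefore return different statistics.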

Results & Impact

A small fraction (N=12, 6.9%) of the 175 studies involving human participants reported all four demographic variables, while 14.3% of studies (N=25) reported no demographic information. Further, only 23 studies (13.1%) reported race and only 14 (8.0%) reported ethnicity, while 142 studies (81.1%) reported sex and 139 (79.4%) reported age.
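
Tallies of this kind can be derived from a per-study table of reporting flags. The following is a minimal sketch, assuming each study is encoded as four booleans. The `studies` records are hypothetical toy rows, not the PhysioNet data, and the helper name `summarize_reporting` is introduced here for illustration only.

```python
from collections import Counter

VARIABLES = ("race", "ethnicity", "sex", "age")

# Hypothetical toy records (NOT the real PhysioNet data): one dict per study,
# True if the study reports that demographic variable.
studies = [
    {"race": True,  "ethnicity": True,  "sex": True,  "age": True},
    {"race": False, "ethnicity": False, "sex": True,  "age": True},
    {"race": False, "ethnicity": False, "sex": True,  "age": False},
    {"race": False, "ethnicity": False, "sex": False, "age": False},
]


def summarize_reporting(records):
    """Count studies per variable and per number of variables reported."""
    n = len(records)
    per_variable = {v: sum(r[v] for r in records) for v in VARIABLES}
    # Distribution of how many of the four variables each study reports.
    n_reported = Counter(sum(r[v] for v in VARIABLES) for r in records)
    return {
        "n_studies": n,
        "per_variable": per_variable,
        "per_variable_pct": {
            v: round(100 * c / n, 1) for v, c in per_variable.items()
        },
        "all_four": n_reported[len(VARIABLES)],
        "none": n_reported[0],
    }


summary = summarize_reporting(studies)
print(summary)
```

Keeping the raw counts alongside the rounded percentages makes it straightforward to check each reported percentage against its count and the total number of studies.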

Studies that reported at least two variables reported both age and sex, while no studies reporting two or fewer variables reported race or ethnicity. Among studies that include ethnicity data, the only reported ethnicities are Latinx/non-Latinx or similar categories. We found that reporting of age was not independent of reporting of sex, and that reporting of ethnicity was not independent of reporting of race.

Studies that reported all four key demographic variables were conducted after 2015, with one exception, and primarily involved critical care patients (patients admitted to the emergency department or an intensive care unit). The studies without demographic information were primarily conducted prior to 2015 (N=18 of 25). Linear regression models indicated no statistically significant relationship between year and the number of variables reported, as demographic reporting varies greatly after ~2005.
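
The regression of variables reported against year can be sketched as follows. The study reports fitting its models with scikit-learn; to stay dependency-free, this sketch instead uses the closed-form ordinary least squares solution in plain Python, which gives the same slope and intercept for a single predictor. The `years` and `n_reported` values are hypothetical toy data, not the PhysioNet data, and the helper name `fit_line` is an assumption introduced for illustration.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x.

    Returns (slope, intercept, r_squared).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have equal length of at least 2")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    syy = sum((yi - mean_y) ** 2 for yi in y)
    if sxx == 0:
        raise ValueError("x has no variation")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Share of the variance in y explained by the fitted line.
    r_squared = 1.0 if syy == 0 else (sxy ** 2) / (sxx * syy)
    return slope, intercept, r_squared


# Hypothetical toy data: publication year and number of the four
# demographic variables each study reports (0 to 4).
years = [1995, 2003, 2008, 2012, 2016, 2019, 2021]
n_reported = [2, 0, 4, 2, 1, 4, 2]

slope, intercept, r_squared = fit_line(years, n_reported)
print(f"slope={slope:.4f} variables/year, R^2={r_squared:.3f}")
```

This sketch reports only the slope, intercept, and R²; it does not compute a p-value for the slope, so judging statistical significance would require an additional test.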

Our analysis of the PND highlights significant demographic reporting gaps that risk embedding biases into AI/ML algorithms. To counteract these biases, there is an urgent need for the research community to lead in establishing and enforcing standards for comprehensive demographic reporting. By enhancing data collection protocols to ensure the representation of diverse populations, we can develop more accurate and fair algorithms to estimate and predict health outcomes.